We produce an astonishing amount of data on this planet every single second. Are we underestimating its sheer scale, and what will it mean for consumers, business and regulation?

In the course of our day-to-day personal and entertainment use, most of us don't really think about where our data comes from or how we're creating it. But what about those who need to know the where and the how? All this data creation is a headache for the likes of data managers and analytical teams. How do they keep up with the sheer volume of 1s and 0s coming at them every single second? And, more importantly, how do they mould it into something worthwhile?

The growth of data, and the impact it will have on consumers, businesses, marketing, management and legislation, is particularly worth discussing now, given the influx of new data users and a big data boom that shows no sign of slowing down.

The numbers

We produce 2.5 quintillion bytes of data a day. That's a lot. In 2021, the average smartphone user is expected to chew through 8.9 gigabytes of data per month; maybe those phone companies will have to re-evaluate their 'All You Can Eat' plans. The Internet 'population' has grown by 7.5% since last year and now stands at 3.7 billion people, nearly half of the world's population.

Every minute, the US alone produces over 2.5 million gigabytes of Internet data. According to the fifth edition of Domo's 'Data Never Sleeps' report, every minute also brings 456,000 tweets, 4,146,600 YouTube videos watched, 3,607,080 Google searches and 15,220,700 texts sent. Staggeringly, around 90% of all data today was created in the last two years.

To put this inexorable growth further into perspective, Eric Schmidt, then CEO of Google, declared in 2010: "There were five exabytes of information created between the dawn of civilization through 2003, but that much information is now created every two days."
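The two headline figures can be checked against each other with a little arithmetic. The short Python sketch below assumes decimal (SI) units and the short-scale "quintillion" (10^18), so 2.5 quintillion bytes is 2.5 exabytes; the variable names are illustrative only. On those assumptions, today's daily output works out to the same rate as Schmidt's "five exabytes every two days", although the two figures come from different sources and years.

```python
# Assumption: decimal (SI) units and the short-scale "quintillion" (10**18).
EXABYTE = 10**18

daily_bytes = 25 * 10**17         # "2.5 quintillion bytes of data a day"
daily_eb = daily_bytes / EXABYTE  # convert bytes to exabytes

# Schmidt (2010): five exabytes of information created every two days
schmidt_eb_per_day = 5 / 2

print(f"Daily output: {daily_eb} EB")                  # Daily output: 2.5 EB
print(f"Schmidt's rate: {schmidt_eb_per_day} EB/day")  # Schmidt's rate: 2.5 EB/day
```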

Needle in a haystack

HubSpot's 'The Ultimate List of Marketing Statistics' compiles analysis from many of the major global tech, search-engine and SEO players on how consumers search for and find information about brands online. All that searching generates huge amounts of data across the globe: in 2015, the media and entertainment company Mashable reported that Google handles over 100 billion searches a month.

Research from the same year by Ascend2, a research-based marketing firm, found that 40% of marketers consider changing search algorithms the most challenging barrier to search engine optimisation (SEO) success. In other words, search engines keep revising how they rank results to match what people are looking for, and marketers have to keep adapting. With billions of searches conducted every month, that target is constantly moving.

Where does it all go?

Edward McDonnell, Director of CeADAR (Centre for Applied Data Analytics Research), a market-focused technology centre for innovation and applied research in big data analytics, says that while the major firms understand the data-management landscape, the rest may not have plans in place yet.

“The big corporations understand the power of the insights that come from big data and have big data science teams. Not all industry verticals are at the same stage of data science maturity. Some sectors are behind.”

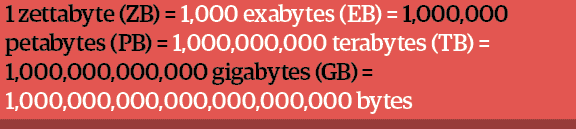

We hear the term 'big data' a lot, and for good reason: it describes the large volumes of data that businesses create and collect every single day, and the outputs they produce from it. DXC Technology predicts that data production will be 44 times greater in 2020 than it was in 2009, and that more than one-third of the data created in 2020 will live in or pass through the cloud. Experts put this as a 4,300% increase in annual data between 2009 and 2020, to 35 zettabytes. The figures are impressive, but how do we manage all this?
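The "44 times" and "4,300%" figures are two ways of stating the same growth: a level N times the original is an increase of (N - 1) × 100 percent. The Python sketch below makes that conversion explicit and, as an illustrative derivation rather than a sourced figure, backs out the 2009 baseline implied by the 35-zettabyte projection. The function name is hypothetical.

```python
def growth_multiple_to_percent_increase(multiple: float) -> float:
    """A level N times the original is an increase of (N - 1) * 100 percent."""
    return (multiple - 1) * 100

multiple = 44          # DXC: 2020 data production ~44x the 2009 level
annual_2020_zb = 35    # projected annual data in 2020, in zettabytes

pct = growth_multiple_to_percent_increase(multiple)
# Derived, not sourced: the 2009 level implied by the two figures above
implied_2009_zb = annual_2020_zb / multiple

print(f"{multiple}x growth = {pct:.0f}% increase")       # 44x growth = 4300% increase
print(f"Implied 2009 baseline: {implied_2009_zb:.2f} ZB")  # Implied 2009 baseline: 0.80 ZB
```

The two projections quoted in the article are therefore consistent with each other: 44-fold growth to 35 zettabytes implies a starting point of under one zettabyte a year.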

“The volume of data being produced daily is growing at a massive rate. Technology has been in a race to keep up but we have had phenomenal innovation with data management technology and physical data centres”, says Laura Kennedy, Head of Data Science Consulting at Next Generation.


In Ireland, some of the major players in the tech industry are looking to build data centres in rural areas. Apple is currently in talks with the Irish government about a potential €850 million centre in Athenry, Galway. The project has been on the cards since February 2015 but has been delayed by environmental protests and calls to build it on a different site.

With all this data bombarding our systems, one major aspect that may be overlooked is hardware maintenance. Working software is all well and good, but if your physical infrastructure fails, your entire data collection effort is futile. In Ireland, however, keeping that machinery running is comparatively easy.

“The cooling costs in these data centres are a lot less in Ireland, and of course we have quite a lot of wind energy as well”, says Edward.

This is a big draw for companies: a major, easily overlooked cost can be cut dramatically simply by choosing where to locate a centre.

That said, this new infrastructure will take a heavy toll on Ireland's energy supply. According to EirGrid's All-Island Generation Capacity Statement 2017-2026, "A key driver for electricity demand in Ireland for the next number of years is the connection of large data centres."

Further research by engineering experts Tim Persoons and Justin A. Weibel showed that data centres currently connected to the Irish national grid consume 250 MW of electricity, 7.8% of the all-island total, a share expected to reach double digits by 2018.
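To give a rough sense of scale, the 250 MW and 7.8% figures imply an all-island total of around 3,200 MW. The Python sketch below assumes both figures measure the same quantity (megawatts of demand) and, purely hypothetically, holds that total fixed to estimate how much extra data centre load a 10% share would represent. In practice the total will grow too, so treat the result as an order-of-magnitude illustration only.

```python
data_centre_mw = 250     # data centre demand on the Irish grid (Persoons and Weibel)
share_of_total = 0.078   # 7.8% of the all-island total

# Assumption: both figures refer to the same quantity (MW of demand).
implied_total_mw = data_centre_mw / share_of_total

# Hypothetical: data centre load needed for a 10% ("double digits") share,
# holding the all-island total fixed (in reality it will also grow).
ten_percent_share_mw = 0.10 * implied_total_mw
extra_mw_needed = ten_percent_share_mw - data_centre_mw

print(f"Implied all-island total: {implied_total_mw:,.0f} MW")  # ~3,205 MW
print(f"Load at a 10% share: {ten_percent_share_mw:,.0f} MW")   # ~321 MW
print(f"Extra data centre load: {extra_mw_needed:,.0f} MW")     # ~71 MW
```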

So, what do we do with the data?

Judging by expert analyses, the infrastructure is in place to handle the copious amounts of data and information we are generating every single second. The headaches for businesses lie in how to access and leverage it, flush out what they don't need, maintain their hardware and curb the carbon emissions that come with heavy energy use.

For organisations, collecting data really boils down to marketing: how to turn the data they collect into something beneficial and useful. That can mean better advertising techniques, eliminating bad practices and building technologies such as machine learning and artificial intelligence into the business.

Big data is part of our everyday lives, making our work and our experience of products more efficient. Edward cited everyday uses of big data such as predicting the failure of sub-sea components in oil and gas pipelines, predicting the failure of mechanical parts in wind turbines, analysing customer turnover, and segmenting a customer database to tailor appropriate offers.
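Customer segmentation, the last example Edward mentions, can be illustrated with a very small rule-based sketch in Python. Everything here is hypothetical: the `Customer` fields, the 180-day and 1,000 spend thresholds, and the segment names are invented for illustration, and real systems typically segment on many more signals, often with statistical clustering rather than hand-written rules. The point is only to show how records are grouped so that each group can be matched to a different offer.

```python
from dataclasses import dataclass

@dataclass
class Customer:
    name: str
    annual_spend: float       # hypothetical field: spend over the last 12 months
    days_since_purchase: int  # hypothetical field: recency of last purchase

def segment(c: Customer) -> str:
    """Assign a segment using hypothetical, hand-picked thresholds."""
    if c.days_since_purchase > 180:
        return "lapsed"       # candidate for a win-back offer
    if c.annual_spend >= 1000:
        return "high_value"   # candidate for a loyalty reward
    return "regular"          # candidate for a standard promotion

customers = [
    Customer("A", 1500.0, 20),
    Customer("B", 200.0, 45),
    Customer("C", 900.0, 365),
]

# Group customer names by segment so each group can receive a tailored offer
segments: dict[str, list[str]] = {}
for c in customers:
    segments.setdefault(segment(c), []).append(c.name)

print(segments)  # {'high_value': ['A'], 'regular': ['B'], 'lapsed': ['C']}
```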

“We are barely tapping the surface of what we could do with the data we have now. The world is becoming more intelligent and connected but we have a lot of work to do before we can say we’re really getting the best out of data,” says Laura.


“The ‘Internet of Things’ is in its infancy,” notes Edward. “The world will become a lot more sensorised. Sensors are very cheap and reliable and the wireless infrastructure to connect them together is there. Just look at how many sensors are in your mobile phone.”

With sensors everywhere in an IoT society, from household appliances to the infrastructure of smart cities, massive amounts of processing power will be needed to analyse all this data and extract useful insights from it.

“We also need to know what data we should keep and what we can get rid of,” warns Edward – a cautionary note for hoarders!

Data & marketing

According to IBM's '10 Key Marketing Trends for 2017' white paper, there is a need to close the gap between marketing and advertising. Jay Henderson, Director of Strategy at IBM, says using data to develop better advertising techniques would help flush out bad practices. These techniques include using marketing data not only to drive sales but also to help customers get the most out of their purchases by building relationships, customer communities and loyalty programmes.

Laura says Netflix is a prime example of a company knowing its customer base through data. "[Netflix] have done an amazing job of understanding their customers by analysing masses of customer data. By understanding their customers they are able to predict which shows will be popular with their viewers and now I believe they have over 100 million subscribers worldwide."

Psychology enables marketers to tap into consumer behaviour and habits, and data is now being used to enhance that. Loren McDonald, Marketing Evangelist/Customer Success at IBM, describes 'left-brain marketing' driven by data, analytics and cognitive technologies. He says data-driven applications have been a key ingredient of marketing for decades, and they will become even more integral as machine learning and artificial intelligence enter the fray in making marketing decisions.

Some organisations are already in a position to manage big data with tools such as cloud storage and sensors, but some industries need to catch up. We've never ignored the impact of data, but we've never needed to heed it as much as we do now. Whether we sit on the customer or the business side, it will affect us all; data is indelibly part of our lives.